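Introduction:

This post shares a shell script I wrote a few months ago, mainly for information gathering, along with the iterations it has gone through between versions; I will keep updating it when time allows. The idea comes from nahamsec, a researcher abroad, whom you can follow on GitHub.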

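Advantages of shell scripts

Shell syntax and structure are fairly simple and easy to pick up.

Shell is an interpreted language, so nothing has to be compiled before running.

For text processing, shell can use grep, jq, awk, sed and similar tools, which handle fairly complex problems quickly and conveniently.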

Development is highly efficient: by relying on powerful existing commands, a task can be completed quickly.

Version 1.0
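As a small illustration of the text-processing point above, and before the full script, here is a minimal sketch of the kind of pipeline the script relies on. The function name `normalize_hosts` and the sample input are hypothetical and are not part of the original script; only sed, tr, grep and sort are assumed to be available.

```shell
#!/bin/bash
# Hypothetical helper (not in the original script): turn a mixed list of
# URLs and hostnames on stdin into unique, lowercase hostnames that belong
# to the root domain given as $1.
normalize_hosts() {
    local root="$1"
    # 1) strip the scheme, 2) strip port/path, 3) strip a leading "*."
    sed -E 's#^[a-zA-Z]+://##; s#[/:].*$##; s#^\*\.##' |
        tr 'A-Z' 'a-z' |
        # keep only the root domain itself or names ending in ".<root>"
        grep -E "(^|\.)${root//./\\.}\$" |
        sort -u
}

# Example run with made-up input
printf '%s\n' \
    'https://www.Example.com/login' \
    'http://api.example.com:8080/v1' \
    '*.dev.example.com' \
    'notexample.com' |
    normalize_hosts example.com
```

Escaping the dots in the root domain keeps a name such as `notexample.com` from slipping through, which a plain `grep "$1"` would allow.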

#!/bin/bash

subdomainThreads=10

dirsearchThreads=50

dirsearchWordlist=~/tools/dirsearch/db/dicc.txt

if [ ! -x "$(command -v jq)" ]; then

echo "[-] This script requires 'jq'. Exiting! More details please visit https://stedolan.github.io/jq/"

exit 1

fi

certficate(){

echo "Query all domain names: Certificate inquiry is running"

# URLs are quoted so the shell does not treat ? as a glob character; -s hides curl's progress meter

curl -s "https://www.threatcrowd.org/searchApi/v2/domain/report/?domain=$1" | jq .subdomains | grep -o "\w.*$1" > ./temp/$1.thr.txt

curl -s "https://api.hackertarget.com/hostsearch/?q=$1" | grep -o "\w.*$1" > ./temp/$1.hac.txt

# %25 is the URL-encoded form of the % wildcard

curl -s "https://crt.sh/?q=%25.$1" | grep "$1" | cut -d '>' -f2 | cut -d '<' -f1 | grep -v " " | sort -u > ./temp/$1.crt.txt

curl -s "https://certspotter.com/api/v0/certs?domain=$1" | jq '.[].dns_names[]' | sed 's/\"//g' | sed 's/\*\.//g' | sort -u | grep -w "$1\$" > ./temp/$1.cert.txt

curl -s "https://www.virustotal.com/ui/domains/$1/subdomains?limit=40" | jq '.data[].id' | awk -F'\"' '{print $2}' > ./temp/$1.vir.txt

}

sublist3r(){

echo "Query all domain names: sublist3r is running"

python ~/tools/Sublist3r/sublist3r.py -d $1 -t 10 -v -o ./temp/$1.sub3r.txt > /dev/null

cat ./temp/$1.sub3r.txt |grep -Eo "[a-zA-Z0-9][-a-zA-Z0-9]{0,62}(\.[a-zA-Z0-9][-a-zA-Z0-9]{0,62})+\.?" >./temp/$1.sub.txt

rm ./temp/$1.sub3r.txt

}

gitdomain(){

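echo "Query all domain names: github-subdomains is running"

# Replace github_key with your own GitHub token. Only stdout is appended to the file; errors stay on the terminal

python3 ~/tools/github-search/github-subdomains.py -d $1 -t github_key >> ./temp/$1.git.txt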

}

alldomain(){

certficate $1

sublist3r $1

gitdomain $1

cat ./temp/$1.thr.txt >>./temp/$1.all.txt

cat ./temp/$1.hac.txt >>./temp/$1.all.txt

cat ./temp/$1.crt.txt >>./temp/$1.all.txt

cat ./temp/$1.cert.txt >>./temp/$1.all.txt

cat ./temp/$1.vir.txt >>./temp/$1.all.txt

cat ./temp/$1.sub.txt >>./temp/$1.all.txt

cat ./temp/$1.git.txt >>./temp/$1.all.txt

## Write all collected domains once to ./temp/$1.alls.txt

cat ./temp/$1.all.txt |sort -u >./temp/$1.alls.txt

rm ./temp/$1.thr.txt&&rm ./temp/$1.hac.txt&&rm ./temp/$1.crt.txt&&rm ./temp/$1.cert.txt

rm ./temp/$1.vir.txt&&rm ./temp/$1.sub.txt&&rm ./temp/$1.git.txt&&rm ./temp/$1.all.txt

}

subdomain() { #this creates a list of all unique root sub domains

echo "subdomains is running"

cat ./temp/$1.alls.txt |rev | cut -d "." -f 1,2,3 | sort -u | rev > ./$1.rootdomain.txt

}

hostalive(){ #Test if it is an available domain

echo "Probing for live hosts..."

# Probe every collected domain over http and https

sort -u ./temp/$1.alls.txt | httprobe -c 50 >> ./temp/$1.responsive.txt

# For each responsive host, keep only the first matching URL

sed -E 's#^https?://##' ./temp/$1.responsive.txt | sort -u | while read -r line; do

probeurl=$(sort -u ./temp/$1.responsive.txt | grep -m 1 -F -- "$line")

echo "$probeurl" >> ./$1.alive.txt

done

sort -u -o ./$1.alive.txt ./$1.alive.txt

echo "Total of $(wc -l < ./$1.alive.txt) live subdomains were found"

rm ./temp/$1.responsive.txt

}

namp(){

echo "nmap is running"

nmap -iL ./temp/$1.alls.txt -sV -T3 -Pn -p3868,3366,8443,8080,9443,9091,3000,8000,5900,8081,6000,10000,8181,3306,5000,4000,8888,5432,15672,9999,161,4044,7077,4040,9000,8089,443,7447,7080,8880,8983,5673,7443,19000,19080 | grep -E 'Nmap scan report for|open|filtered|closed' >./$1.nmap.txt

}

wayback(){

echo "wayback is running"

cat ./$1.alive.txt | waybackurls > ./wayback/$1.waybackurls.txt

cat ./wayback/$1.waybackurls.txt | sort -u | unfurl --unique keys > ./wayback/$1.paramlist.txt

[ -s ./wayback/$1.paramlist.txt ] && echo "Wordlist saved to ./wayback/$1.paramlist.txt"

cat ./wayback/$1.waybackurls.txt | sort -u | grep -P "\w+\.js(\?|$)" | sort -u > ./wayback/$1.jsurls.txt

[ -s ./wayback/$1.jsurls.txt ] && echo "JS Urls saved to ./wayback/$1.jsurls.txt"

cat ./wayback/$1.waybackurls.txt | sort -u | grep -P '\w+\.php(\?|$)' | sort -u > ./wayback/$1.phpurls.txt

[ -s ./wayback/$1.phpurls.txt ] && echo "PHP Urls saved to ./wayback/$1.phpurls.txt"

cat ./wayback/$1.waybackurls.txt | sort -u | grep -P '\w+\.aspx(\?|$)' | sort -u > ./wayback/$1.aspxurls.txt

[ -s ./wayback/$1.aspxurls.txt ] && echo "ASP Urls saved to ./wayback/$1.aspxurls.txt"

cat ./wayback/$1.waybackurls.txt | sort -u | grep -P '\w+\.jsp(\?|$)' | sort -u > ./wayback/$1.jspurls.txt

[ -s ./wayback/$1.jspurls.txt ] && echo "JSP Urls saved to ./wayback/$1.jspurls.txt"

}

dirsearcher(){

echo "Starting dirsearch..."

cat ./temp/$1.alls.txt | xargs -P$subdomainThreads -I % sh -c "python3 ~/tools/dirsearch/dirsearch.py -e php,asp,aspx,jsp,html,zip,jar -w $dirsearchWordlist -t $dirsearchThreads --simple-report=./dir/$1.only.txt --plain-text-report=./dir/$1.stacode.txt --json-report=./dir/$1.json.txt -u % | grep Target && tput sgr0 "

}

# Create the output directories the functions above write to

mkdir -p ./temp ./wayback ./dir

alldomain $1

subdomain $1

hostalive $1

# namp $1

wayback $1

dirsearcher $1

Version 1.1

#!/bin/bash

#

dirsearcherThreads1=1

nmapThreads=5

dirsearchThreads=50

dirsearchWordlist=~/tools/dirsearch/db/dicc.txt

github_key=key

subdomainDicts=~/fuzzDicts/subdomainDicts/main.txt

#

if [ ! -x "$(command -v jq)" ]; then

echo "[-] This script requires 'jq'. Exiting! More details please visit https://stedolan.github.io/jq/"

exit 1

fi

gitdomain(){

echo -e "\033[1;33m github-subdomains is running \033[0m"

# Only stdout is appended to the file; errors stay on the terminal

python3 ~/tools/github-search/github-subdomains.py -d $1 -t $github_key >> ./$1/$foldername/temp/gitdomain.txt

}

massdns(){

echo -e "\033[1;33m massdns is running \033[0m"

nohup ~/tools/massdns/scripts/subbrute.py $subdomainDicts $1 |nohup ~/tools/massdns/bin/massdns -r ~/tools/massdns/lists/resolvers.txt -t A -q -o S |awk -F '. ' '{print $1}' > ./$1/$foldername/temp/massdns1.txt

cat ./$1/$foldername/temp/massdns1.txt |sort -u |grep -E "[a-zA-Z0-9][-a-zA-Z0-9]{0,62}(\.[a-zA-Z0-9][-a-zA-Z0-9]{0,62})+\.?" > ./$1/$foldername/temp/massdns.txt

}

alldomain(){

gitdomain $1

massdns $1

cat ./$1/$foldername/temp/amass.txt >> ./$1/$foldername/temp/all.txt

cat ./$1/$foldername/temp/subfinder.txt >> ./$1/$foldername/temp/all.txt

cat ./$1/$foldername/temp/gitdomain.txt >> ./$1/$foldername/temp/all.txt

cat ./$1/$foldername/temp/massdns.txt >> ./$1/$foldername/temp/all.txt

## Write all collected domains once to ./$1/$foldername/alls.txt

cat ./$1/$foldername/temp/all.txt |sort -u > ./$1/$foldername/alls.txt

cat ./$1/$foldername/alls.txt > ./$1.alls.txt

}

subdomain() { #this creates a list of all unique root sub domains

echo -e "\033[1;33m subdomain is running \033[0m"

cat ./$1/$foldername/alls.txt |rev | cut -d "." -f 1,2,3 | sort -u | rev > ./$1/$foldername/rootdomain.txt

}

hostalive(){ #Test if it is an available domain

echo -e "\033[1;33m hostalive is running \033[0m"

sort -u ./$1/$foldername/alls.txt | httprobe -c 50 >> ./$1/$foldername/temp/repsponsive.txt

# For each responsive host, keep only the first matching URL

sed -E 's#^https?://##' ./$1/$foldername/temp/repsponsive.txt | sort -u | while read -r line; do

probeurl=$(sort -u ./$1/$foldername/temp/repsponsive.txt | grep -m 1 -F -- "$line")

echo "$probeurl" >> ./$1/$foldername/alive.txt

done

sort -u -o ./$1/$foldername/alive.txt ./$1/$foldername/alive.txt

echo "Total of $(wc -l < ./$1/$foldername/alive.txt) live subdomains were found"

}

nmap(){

echo -e "\033[1;33m nmap is running \033[0m"

cat ./$1.alls.txt | xargs -P$nmapThreads -I % sh -c "nmap -sV -T3 -Pn -p3868,3366,8443,8080,9443,9091,3000,8000,5900,8081,6000,10000,8181,3306,5000,4000,8888,5432,15672,9999,161,4044,7077,4040,9000,8089,443,7447,7080,8880,8983,5673,7443,19000,19080 % | grep -E 'Nmap scan report for|open|filtered|closed'" >> ./$1/$foldername/nmap.txt

}

wayback(){

echo -e "\033[1;33m wayback is running \033[0m"

cat ./$1/$foldername/alive.txt | waybackurls > ./$1/$foldername/wayback/waybackurls.txt

cat ./$1/$foldername/wayback/waybackurls.txt | sort -u | unfurl --unique keys > ./$1/$foldername/wayback/paramlist.txt

[ -s ./$1/$foldername/wayback/paramlist.txt ] && echo "Wordlist saved to ./$1/$foldername/wayback/paramlist.txt"

cat ./$1/$foldername/wayback/waybackurls.txt | sort -u | grep -P "\w+\.js(\?|$)" | sort -u > ./$1/$foldername/wayback/jsurls.txt

[ -s ./$1/$foldername/wayback/jsurls.txt ] && echo "JS Urls saved to ./$1/$foldername/wayback/jsurls.txt"

cat ./$1/$foldername/wayback/waybackurls.txt | sort -u | grep -P '\w+\.php(\?|$)' | sort -u > ./$1/$foldername/wayback/phpurls.txt

[ -s ./$1/$foldername/wayback/phpurls.txt ] && echo "PHP Urls saved to ./$1/$foldername/wayback/phpurls.txt"

cat ./$1/$foldername/wayback/waybackurls.txt | sort -u | grep -P '\w+\.aspx(\?|$)' | sort -u > ./$1/$foldername/wayback/aspxurls.txt

[ -s ./$1/$foldername/wayback/aspxurls.txt ] && echo "ASP Urls saved to ./$1/$foldername/wayback/aspxurls.txt"

cat ./$1/$foldername/wayback/waybackurls.txt | sort -u | grep -P '\w+\.jsp(\?|$)' | sort -u > ./$1/$foldername/wayback/jspurls.txt

[ -s ./$1/$foldername/wayback/jspurls.txt ] && echo "JSP Urls saved to ./$1/$foldername/wayback/jspurls.txt"

}

dirsearcher(){

echo "Starting dirsearch..."

cat ./$1.alls.txt | xargs -P$dirsearcherThreads1 -I % sh -c "python3 ~/tools/dirsearch/dirsearch.py -e php,asp,aspx,jsp,html,zip,jar -w $dirsearchWordlist -t $dirsearchThreads -u % " >> ./$1/$foldername/dirsearcher.txt

}

cleantemp(){

# -f keeps cleanup quiet when a tool failed and its temp file was never created

rm -f ./$1/$foldername/temp/amass.txt

rm -f ./$1/$foldername/temp/subfinder.txt

rm -f ./$1/$foldername/temp/gitdomain.txt

rm -f ./$1/$foldername/temp/all.txt

rm -f ./$1/$foldername/temp/repsponsive.txt

rm -f ./$1.alls.txt

rm -f ./$1/$foldername/temp/massdns1.txt

rm -f ./$1/$foldername/temp/massdns.txt

rm -f ./$1/$foldername/temp/amass1.txt

}

logo(){

echo -e "\033[0;31m

_____

| __ \

| |__) |___ ___ ___ _ __

| _ // _ \/ __/ _ \| '_ \

| | \ \ __/ (_| (_) | | | |

|_| \_\___|\___\___/|_| |_|

\033[0m"

}

amass(){

echo -e "\033[1;33m amass is running \033[0m"

nohup amass enum -d $1 > ./$1/$foldername/temp/amass1.txt

cat ./$1/$foldername/temp/amass1.txt | grep -Eo "[a-zA-Z0-9][-a-zA-Z0-9]{0,62}(\.[a-zA-Z0-9][-a-zA-Z0-9]{0,62})+\.?" | grep -Eo ".*$1\>" |sort -u > ./$1/$foldername/temp/amass.txt

}

main(){

clear

logo

if [ -d "./$1" ]

then

echo "This is a known target."

else

mkdir ./$1

fi

[ ! -d "./$1/$foldername" ] && mkdir ./$1/$foldername

[ ! -d "./$1/$foldername/temp/" ] && mkdir ./$1/$foldername/temp/

[ ! -d "./$1/$foldername/wayback/" ] && mkdir ./$1/$foldername/wayback/

[ ! -d "./$1/$foldername/dirsearcher/" ] && mkdir ./$1/$foldername/dirsearcher/

touch ./$1/$foldername/nmap.txt

### enum subdomain

#:'

amass $1

echo -e "\033[1;33m subfinder is running \033[0m"

nohup subfinder -d $1 -o ./$1/$foldername/temp/subfinder.txt

alldomain $1

subdomain $1

hostalive $1

#'

### endport searcher

#:'

nmap $1

wayback $1

dirsearcher $1

#'

cleantemp $1

}

todate=$(date +"%Y-%m-%d")

# path=$(pwd)

foldername=recon-$todate

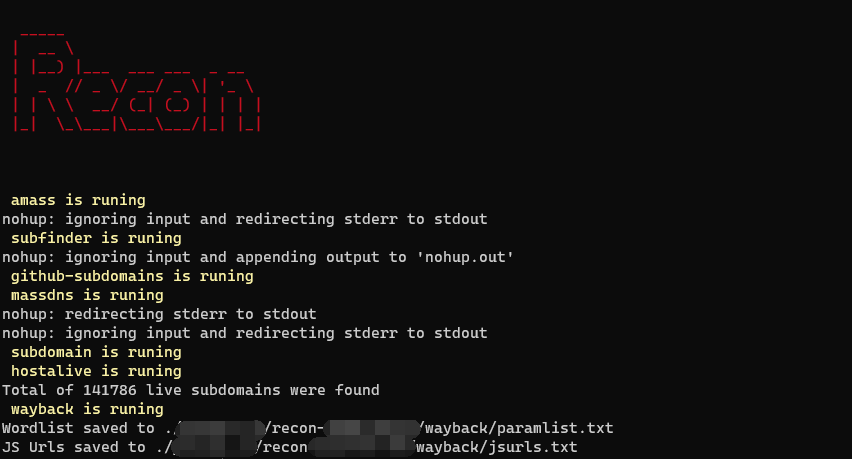

main $1执行效果:

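Note: running the scripts above as-is will not do much, because some environment setup is required first. They are only meant to offer ideas. You can write your own script to match your own information-gathering workflow, and the same approach is not limited to information gathering.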
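Since the scripts only check for jq, one way to make the environment requirement explicit is to check every external tool up front. The following is a minimal sketch, not part of the original scripts: the function name `missing_tools` is hypothetical, and the tool list is simply taken from the commands that appear in the scripts above, so adjust it to your own setup.

```shell
#!/bin/bash
# Hypothetical helper: print the name of every command in "$@"
# that cannot be found on PATH.
missing_tools() {
    local tool
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# Tool names taken from the commands the scripts above invoke
required="jq curl nmap httprobe waybackurls unfurl amass subfinder"

# $required is left unquoted on purpose so it splits into separate words
missing=$(missing_tools $required)

if [ -n "$missing" ]; then
    echo "[-] Missing tools:" $missing
else
    echo "[+] All required tools were found on PATH."
fi
```

Tools that are run from a fixed path rather than from PATH, such as the Python scripts under ~/tools, would need a separate file-existence check.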